Publications

Blink: An educational software debugger for Scratch

Abstract

The process of teaching children to code is often slowed down by the delay in providing feedback on each student’s code. Especially in larger classrooms, teachers often lack the time to give individual feedback to each student. That is why it is important to equip children with tools that can provide immediate feedback and thus enhance their independent learning skills. This article presents Blink, a debugging tool specifically designed for Scratch, the most commonly taught programming language for children. Blink comes with basic debugging features such as ‘step’ and ‘pause’, allowing precise monitoring of the execution of Scratch programs. It also provides users with more advanced debugging options, such as back-in-time debugging and programmable pause. A group of children attending an extracurricular coding class have been testing the usefulness of Blink. Feedback from these young users indicates that Blink helps them pinpoint programming errors more accurately, and they have expressed an overall positive view of the tool.

Citation

Strijbol, N., De Proft, R., Goethals, K., Mesuere, B., Dawyndt, P., & Scholliers, C. (2024). Blink: An educational software debugger for Scratch. SoftwareX, 25, 101617. https://doi.org/10.1016/j.softx.2023.101617

Reproducing Predictive Learning Analytics in CS1: Toward Generalizable and Explainable Models for Enhancing Student Retention

Abstract

Predictive learning analytics has been widely explored in educational research to improve student retention and academic success in an introductory programming course in computer science (CS1). General-purpose and interpretable dropout predictions still pose a challenge. Our study aims to reproduce and extend the data analysis of a privacy-first student pass–fail prediction approach proposed by Van Petegem and colleagues (2022) in a different CS1 course. Using student submission and self-report data, we investigated the reproducibility of the original approach, the effect of adding self-reports to the model, and the interpretability of the model features. The results showed that the original approach for student dropout prediction could be successfully reproduced in a different course context and that adding self-report data to the prediction model improved accuracy for the first four weeks. We also identified relevant features associated with dropout in the CS1 course, such as timely submission of tasks and iterative problem solving. When analyzing student behaviour, submission data and self-report data were found to complement each other. The results highlight the importance of transparency and generalizability in learning analytics and the need for future research to identify other factors beyond self-reported aptitude measures and student behaviour that can enhance dropout prediction.

Citation

Zhidkikh, D., Heilala, V., Van Petegem, C., Dawyndt, P., Järvinen, M., Viitanen, S., De Wever, B., Mesuere, B., Lappalainen, V., Kettunen, L., & Hämäläinen, R. (2024). Reproducing Predictive Learning Analytics in CS1: Toward Generalizable and Explainable Models for Enhancing Student Retention. Journal of Learning Analytics, 1–21. https://doi.org/10.18608/jla.2024.7979

Dodona: Learn to code with a virtual co-teacher that supports active learning

Abstract

Dodona (dodona.ugent.be) is an intelligent tutoring system for computer programming. It provides real-time data and feedback to help students learn better and teachers teach better. Dodona is free to use and has more than 61 thousand registered users across many educational and research institutes, including 20 thousand new users in the last year. The source code of Dodona is available on GitHub under the permissive MIT open-source license. This paper presents Dodona and its design and look-and-feel. We highlight some of the features built into Dodona that make it possible to shorten feedback loops, and discuss an example of how these features can be used in practice. We also highlight some of the research opportunities that Dodona has opened up and present some future developments.

Citation

Van Petegem, C., Maertens, R., Strijbol, N., Van Renterghem, J., Van der Jeugt, F., De Wever, B., Dawyndt, P., & Mesuere, B. (2023). Dodona: Learn to code with a virtual co-teacher that supports active learning. SoftwareX, 24, 101578. https://doi.org/10.1016/j.softx.2023.101578

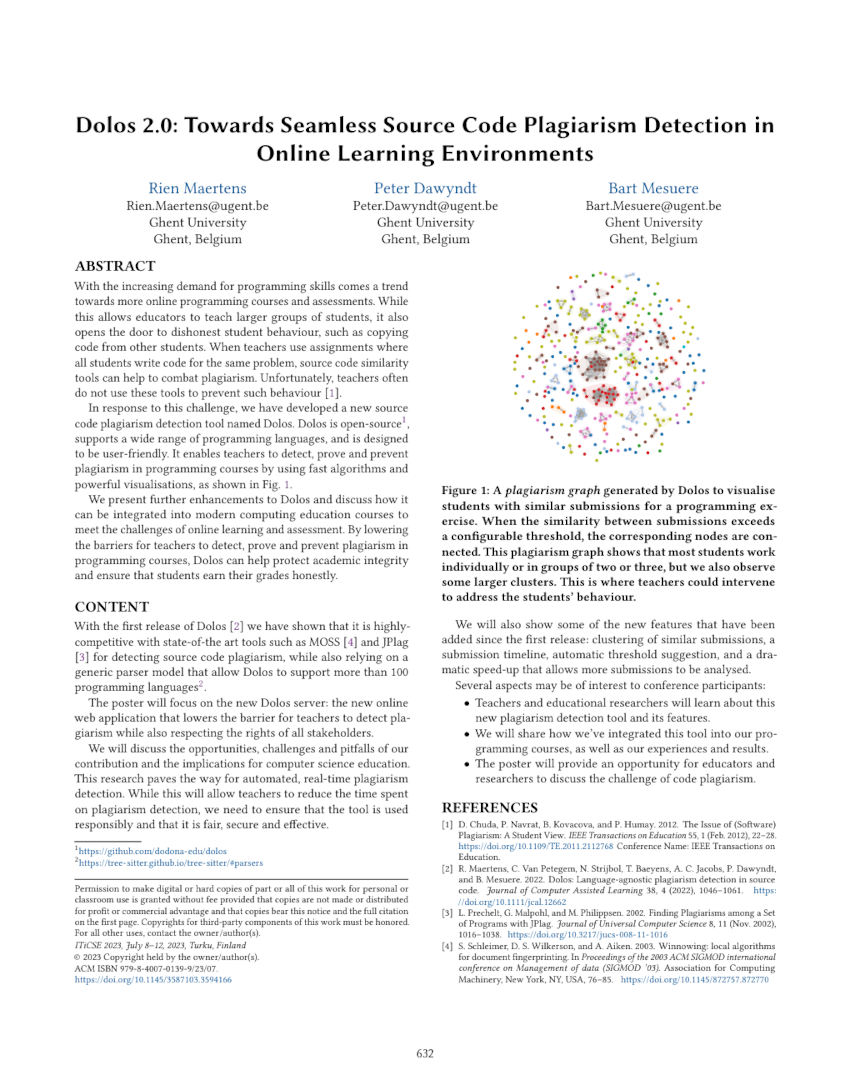

Dolos 2.0: Towards Seamless Source Code Plagiarism Detection in Online Learning Environments

Abstract

With the increasing demand for programming skills comes a trend towards more online programming courses and assessments. While this allows educators to teach larger groups of students, it also opens the door to dishonest student behaviour, such as copying code from other students. When teachers use assignments where all students write code for the same problem, source code similarity tools can help to combat plagiarism. Unfortunately, teachers often do not use these tools to prevent such behaviour. In response to this challenge, we have developed a new source code plagiarism detection tool named Dolos. Dolos is open-source, supports a wide range of programming languages, and is designed to be user-friendly. It enables teachers to detect, prove and prevent plagiarism in programming courses by using fast algorithms and powerful visualisations. We present further enhancements to Dolos and discuss how it can be integrated into modern computing education courses to meet the challenges of online learning and assessment. By lowering the barriers for teachers to detect, prove and prevent plagiarism in programming courses, Dolos can help protect academic integrity and ensure that students earn their grades honestly.

Citation

Maertens, R., Dawyndt, P., & Mesuere, B. (2023). Dolos 2.0: Towards Seamless Source Code Plagiarism Detection in Online Learning Environments. Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 2, 632. https://doi.org/10.1145/3587103.3594166

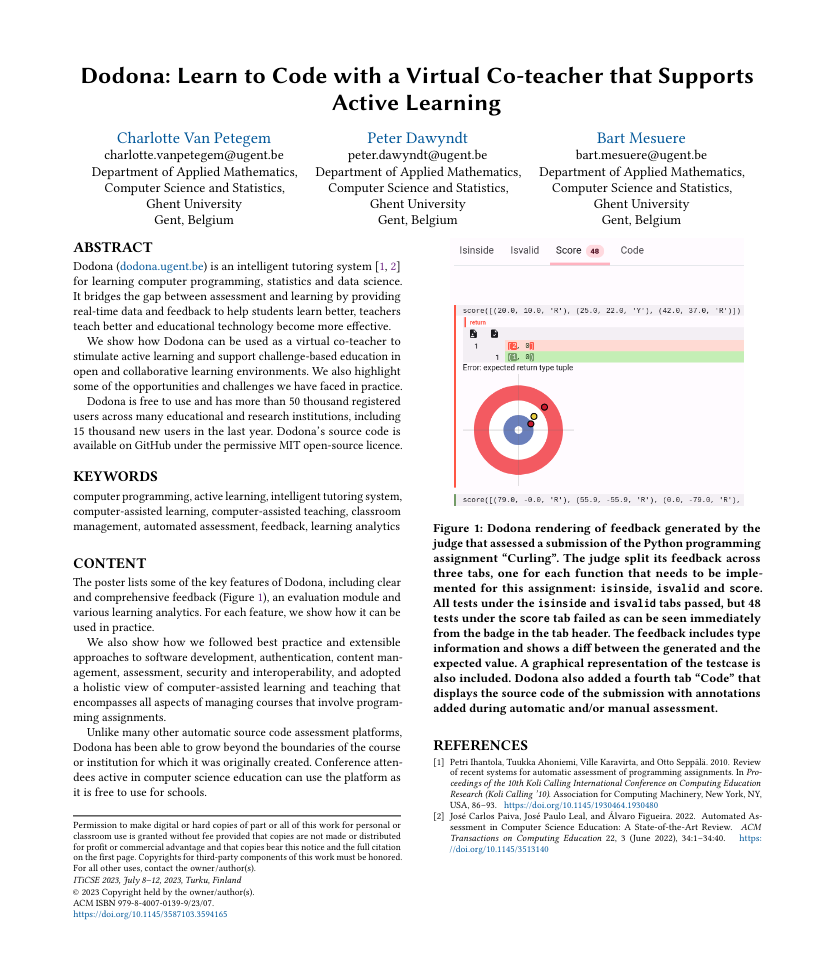

Dodona: Learn to Code with a Virtual Co-teacher that Supports Active Learning

Abstract

Dodona (dodona.ugent.be) is an intelligent tutoring system for learning computer programming, statistics and data science. It bridges the gap between assessment and learning by providing real-time data and feedback to help students learn better, teachers teach better and educational technology become more effective. We show how Dodona can be used as a virtual co-teacher to stimulate active learning and support challenge-based education in open and collaborative learning environments. We also highlight some of the opportunities and challenges we have faced in practice. Dodona is free to use and has more than 50 thousand registered users across many educational and research institutions, including 15 thousand new users in the last year. Dodona's source code is available on GitHub under the permissive MIT open-source license.

Citation

Van Petegem, C., Dawyndt, P., & Mesuere, B. (2023). Dodona: Learn to Code with a Virtual Co-teacher that Supports Active Learning. Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 2, 633. https://doi.org/10.1145/3587103.3594165

Blink: An Educational Software Debugger for Scratch

Abstract

Debugging is an important aspect of programming, and most programming languages offer features and tools to facilitate it. Because debugging can also be frustrating, it requires good scaffolding, for which a debugger can be a useful tool. Scratch is a visual block-based programming language that is commonly used to teach programming to children aged 10–14. It comes with its own integrated development environment (IDE), where children can edit and run their code. This IDE lacks some of the tools that are available in traditional IDEs, such as a debugger. In response to this challenge, we developed Blink, a debugger for Scratch designed to be usable by the young audience that typically uses Scratch. We present the currently implemented features of the debugger and the challenges we faced while implementing them, from both a user-experience and a technical standpoint.

Citation

Strijbol, N., Scholliers, C., & Dawyndt, P. (2023). Blink: An Educational Software Debugger for Scratch. Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 2, 648. https://doi.org/10.1145/3587103.3594189

TESTed — An educational testing framework with language-agnostic test suites for programming exercises

Abstract

In educational contexts, automated assessment tools (AAT) are commonly used to provide formative feedback on programming exercises. However, designing exercises for AAT remains a laborious task or imposes limitations on the exercises. Most AAT use either output comparison, where the generated output is compared against an expected output, or unit testing, where the tool has access to the code of the submission under test. While output comparison has the advantage of being programming language independent, the testing capabilities are limited to the output. Conversely, unit testing can generate more granular feedback, but is tightly coupled with the programming language of the submission. In this paper, we introduce TESTed, which enables the best of both worlds: combining the granular feedback of unit testing with the programming language independence of output comparison. Educators can save time by designing exercises that can be used across programming languages. Finally, we report on using TESTed in educational practice.
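The contrast between the two assessment styles named in this abstract can be illustrated with a minimal Python sketch. It is not TESTed's API or test-suite format: the function names `submission_echo_sum`, `check_by_output` and `check_by_unit_test` are hypothetical and exist only for illustration.

```python
import contextlib
import io


def submission_echo_sum(a: int, b: int) -> int:
    """Hypothetical student submission: prints and returns the sum."""
    total = a + b
    print(total)
    return total


def check_by_output(func, args, expected_stdout: str) -> bool:
    """Output comparison: only the text written to stdout is inspected."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        func(*args)
    return buffer.getvalue().strip() == expected_stdout.strip()


def check_by_unit_test(func, args, expected_value) -> bool:
    """Unit testing: the checker calls the code and inspects the return value."""
    # Printed output is discarded; only the returned value matters here.
    with contextlib.redirect_stdout(io.StringIO()):
        return func(*args) == expected_value
```

The output check would work for a submission in any language that prints text, whereas the unit-test check needs to call the function directly and is therefore tied to Python in this sketch.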

Citation

Strijbol, N., Van Petegem, C., Maertens, R., Sels, B., Scholliers, C., Dawyndt, P., & Mesuere, B. (2023). TESTed—An educational testing framework with language-agnostic test suites for programming exercises. SoftwareX, 22, 101404. https://doi.org/10.1016/j.softx.2023.101404

Pass/fail prediction in programming courses

Abstract

We present a privacy-friendly early-detection framework to identify students at risk of failing in introductory programming courses at university. The framework was validated for two different courses with annual editions taken by higher education students (N = 2 080) and was found to be highly accurate and robust against variation in course structures, teaching and learning styles, programming exercises and classification algorithms. By using interpretable machine learning techniques, the framework also provides insight into what aspects of practising programming skills promote or inhibit learning or have no or minor effect on the learning process. Findings showed that the framework was capable of predicting students’ future success already early on in the semester.

Citation

Van Petegem, C., Deconinck, L., Mourisse, D., Maertens, R., Strijbol, N., Dhoedt, B., De Wever, B., Dawyndt, P., & Mesuere, B. (2022). Pass/Fail Prediction in Programming Courses. Journal of Educational Computing Research, 68–95. https://doi.org/10.1177/07356331221085595

Dolos: Language-agnostic plagiarism detection in source code

Abstract

Learning to code is increasingly embedded in secondary and higher education curricula, where solving programming exercises plays an important role in the learning process and in formative and summative assessment. Unfortunately, students admit that copying code from each other is a common practice and teachers indicate they rarely use plagiarism detection tools. We want to lower the barrier for teachers to detect plagiarism by introducing a new source code plagiarism detection tool (Dolos) that is powered by state-of-the-art similarity detection algorithms, offers interactive visualizations, and uses generic parser models to support a broad range of programming languages. Dolos is compared with state-of-the-art plagiarism detection tools in a benchmark based on a standardized dataset. We describe our experience with integrating Dolos in a programming course with a strong focus on online learning and the impact of transitioning to remote assessment during the COVID-19 pandemic. Dolos outperforms other plagiarism detection tools in detecting potential cases of plagiarism and is a valuable tool for preventing and detecting plagiarism in online learning environments. It is available under the permissive MIT open-source license at https://dolos.ugent.be.

Citation

Maertens, R., Van Petegem, C., Strijbol, N., Baeyens, T., Jacobs, A. C., Dawyndt, P., & Mesuere, B. (2022). Dolos: Language-agnostic plagiarism detection in source code. Journal of Computer Assisted Learning. https://doi.org/10.1111/jcal.12662